Even worse, the pilots and the airlines didn’t even know the sensor or associated software control existed and could do that.

let’s see if we can find supporting information on this answer elsewhere or, maybe ask the same question a different way to see if the new answer(s) seem to line up

Yeah, that’s probably the best way to go about it, but still requires some foundational knowledge on your part. For example, in a recent study I worked on we found that programming students struggle hard when the LLM output is wrong and they don’t know enough to understand why. They then tend to trust the LLM anyways and end up prompting variations of the same thing over and over again to no avail. Other studies similarly found that while good students can work faster with AI, many others are actually worse off due to being misled.

I still see them largely as black boxes

The crazy part is that they are, even for the researchers who came up with them. Sure, we can understand how the data flows from input to output, but realistically not a single person in the world could look at all of the weights in an LLM and tell you what it has learned. Basically everything we know about their capabilities on tasks is based on just trying it out and seeing how well it works. Hell, even “prompt engineers” are making a lot of their decisions based on vibes only.

I don’t know if it’s just my age/experience or some kind of innate “horse sense”, but I tend to do alright with detecting shit responses, whether they be human trolls or an LLM that is lying through its virtual teeth

I’m not sure how you would do that if you are asking about something you don’t have expertise in yet, as an LLM takes the exact same authoritative tone regardless of whether the information is real.

Perhaps with a reasonable prompt an LLM can be more honest about when it’s hallucinating?

So far, research suggests this is not possible (unsurprisingly, given the nature of LLMs). Introspective outputs, such as certainty or justifications for decisions, do not map closely to the LLM’s actual internal state.

Well, I’m generally very anti-LLM, but as a library author in Java I have found it very helpful for creating lots of similar overloads/methods for different types and filling in the corresponding documentation comments. I’ve already done all the thinking and I just need to check that the overload makes the right call or does the same thing that the other ones do. In that particular case, it’s faster. But if I myself don’t know yet how I’m going to do something, I would never trust an AI to tell me.

? the feature is still available in the US

I believe it should still work, as alarms trigger for me even if my phone updated overnight or I put it on the charger dead before going to sleep, but I’ll have to test it

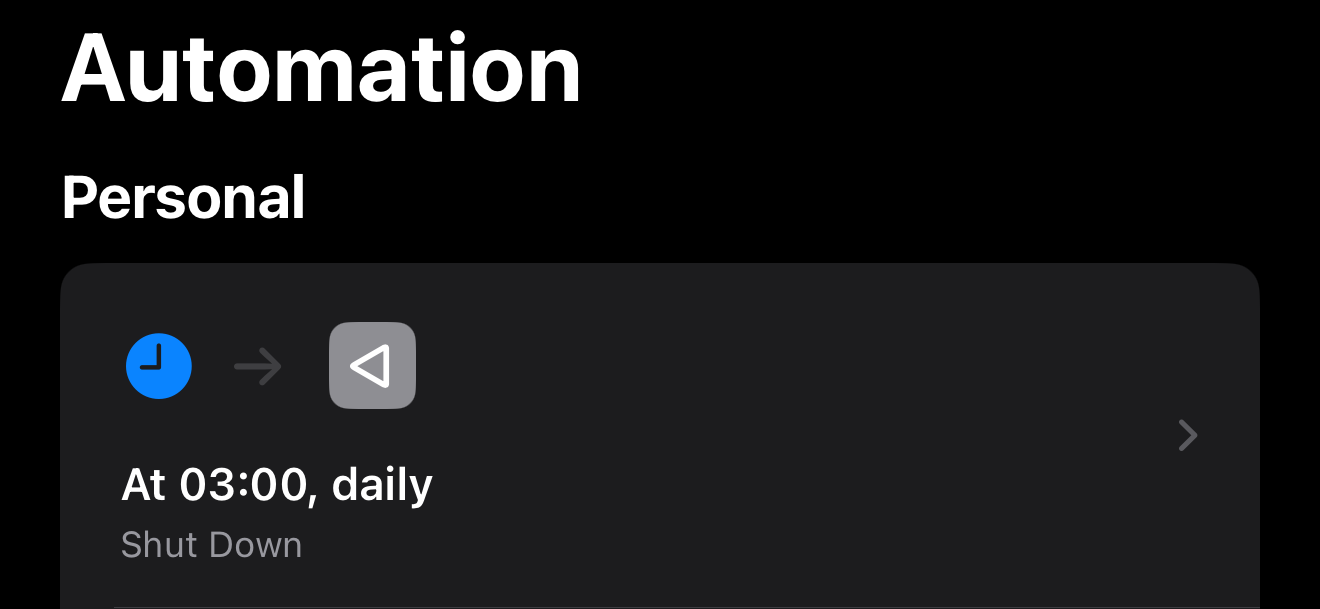

As the linked screenshot shows, you have the option to choose between shutting down and rebooting. There is no need to explain the difference to me, I demonstrated that the thing you want to do is possible.

You can, see my other comment: https://feddit.org/comment/3001525

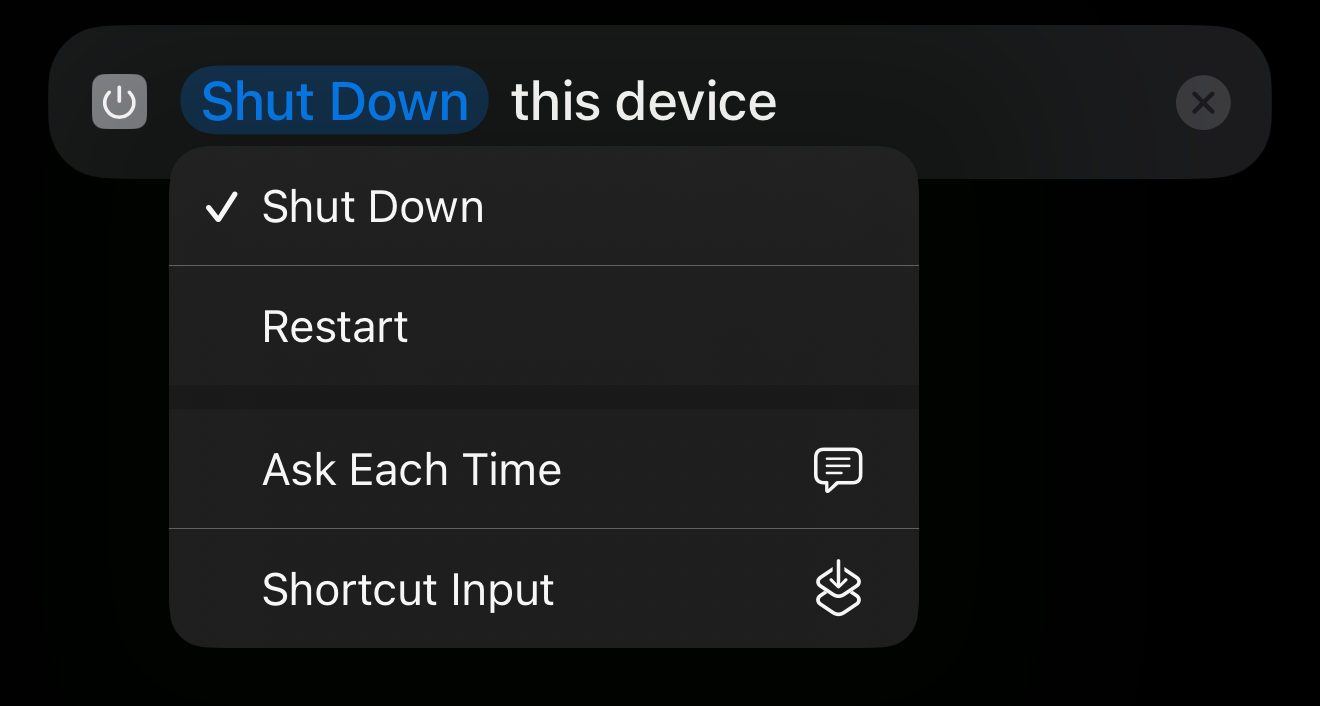

That’s not what I mean, I’m talking about the Shortcuts app:

There is a shortcut action to shut down the phone which you could trigger with an automation, I suppose.

Your reply refers to a “junior who is nervous” and “how the sausage is made”, which makes no sense in the context of someone who just has to review code

They’re saying developers dislike having to review other people’s code that’s unfamiliar to them, not having their own code reviewed.

Not to mention the law firm they hired advertises anti-union action, so that should tell you whether they can be trusted to be fair to workers…

If you read the linked article, you will find that exterior camera feeds are plenty invasive.

I don’t think they have interior cameras (although other manufacturers do), but the front and backup camera feeds provide plenty of information as well.

Then there’s also this, if you need any more reason to be concerned.

Their privacy policy includes a provision that they can use the cameras and GPS to infer things such as sexual orientation, so yeah.

Windows Recall, the screengrabber they were about to release with an unencrypted database as an opt-out feature.

Some Left Winger sees a Right Winger say something they don’t like. The Left Winger can’t counter it

Many right-wing positions are very easy to counter with scientific evidence: climate change, crime rates, public health policy, social programs…

So if you think the “Left Winger” can’t counter it… do you not consider evidence-based arguments to be legitimate?

I’m an empirical researcher in software engineering, and all of the points you’re making are supported by recent papers on SE and/or education. We are also seeing a strong shift in the behavior of our students and a lack of ability to explain or justify their “own” work.