Developers: Those are rookie numbers

I’m going for the high score!

I’m an IT engineer, 100% of my time is spent on computer problems.

I’m a home server hobbyist. I like to think of them as computer solutions.

You don’t eat, sleep or go to the bathroom?

Someone call Harrison Ford, we have a replicant!

At least 10 percent of my time sitting in a classroom in college was waiting for the prof to get the projector to work with their laptop.

So far I am lucky enough to have not had any classes that have had the issue of a professor not being able to get their projector or computer to work.

The closest I had was the Linux VMs we used for a Linux fundamentals class having trouble because someone accidentally gave them too many resources (I think it was memory, but I don’t fully remember), so they would sometimes just stop working because there wasn’t enough for every VM. It somehow persisted for pretty much the whole quarter before being figured out.
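For anyone curious what that kind of over-allocation looks like on paper, here is a minimal sketch. The host size, VM count, and per-VM memory are made-up numbers, not the actual class setup, and `overcommitted` is a hypothetical helper. Real hypervisors can often overcommit memory to some degree, so exceeding the host total is a warning sign rather than a guaranteed failure.

```python
# Hypothetical numbers for illustration only; not the setup from the class above.
HOST_MEMORY_GIB = 64


def overcommitted(vm_memory_gib, host_memory_gib):
    """Return by how many GiB the VM allocations exceed the host (0 if they fit)."""
    return max(0, sum(vm_memory_gib) - host_memory_gib)


vms = [4] * 20  # twenty student VMs at 4 GiB each
excess = overcommitted(vms, HOST_MEMORY_GIB)
print(f"Allocated {sum(vms)} GiB on a {HOST_MEMORY_GIB} GiB host; over by {excess} GiB")
```

Dropping each VM to 2 GiB in this sketch brings the total back under the host's memory.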

“Up to 20%” is meaningless for a headline and is pure click bait. It could be any number between 0% and 20%. Or put another way, any number from no time at all to a horrifying more than an entire day per week.

Why not just state the average from what is probably a statistically irrelevant study and move on?
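To put the range in concrete terms, here is a quick sketch of what different percentages work out to. The 40-hour work week is my assumption, not something from the article, and `hours_lost` is just an illustrative helper.

```python
def hours_lost(percent, hours_per_week=40):
    """Hours per week spent on computer problems at a given percentage.

    hours_per_week defaults to 40, which is an assumption for illustration.
    """
    return hours_per_week * percent / 100


for percent in (0, 5, 10, 20):
    print(f"{percent:>2}% of a 40-hour week = {hours_lost(percent):.1f} hours")
```

Under that assumption the top of the range is about a full working day, and the bottom is nothing at all, which is why the average would say more than the upper bound.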

My job is to fix computers so I waste 100% of my time with computer problems.

“I’m here to fuck computers and chew bubblegum…”

At the same time?

If I have to.

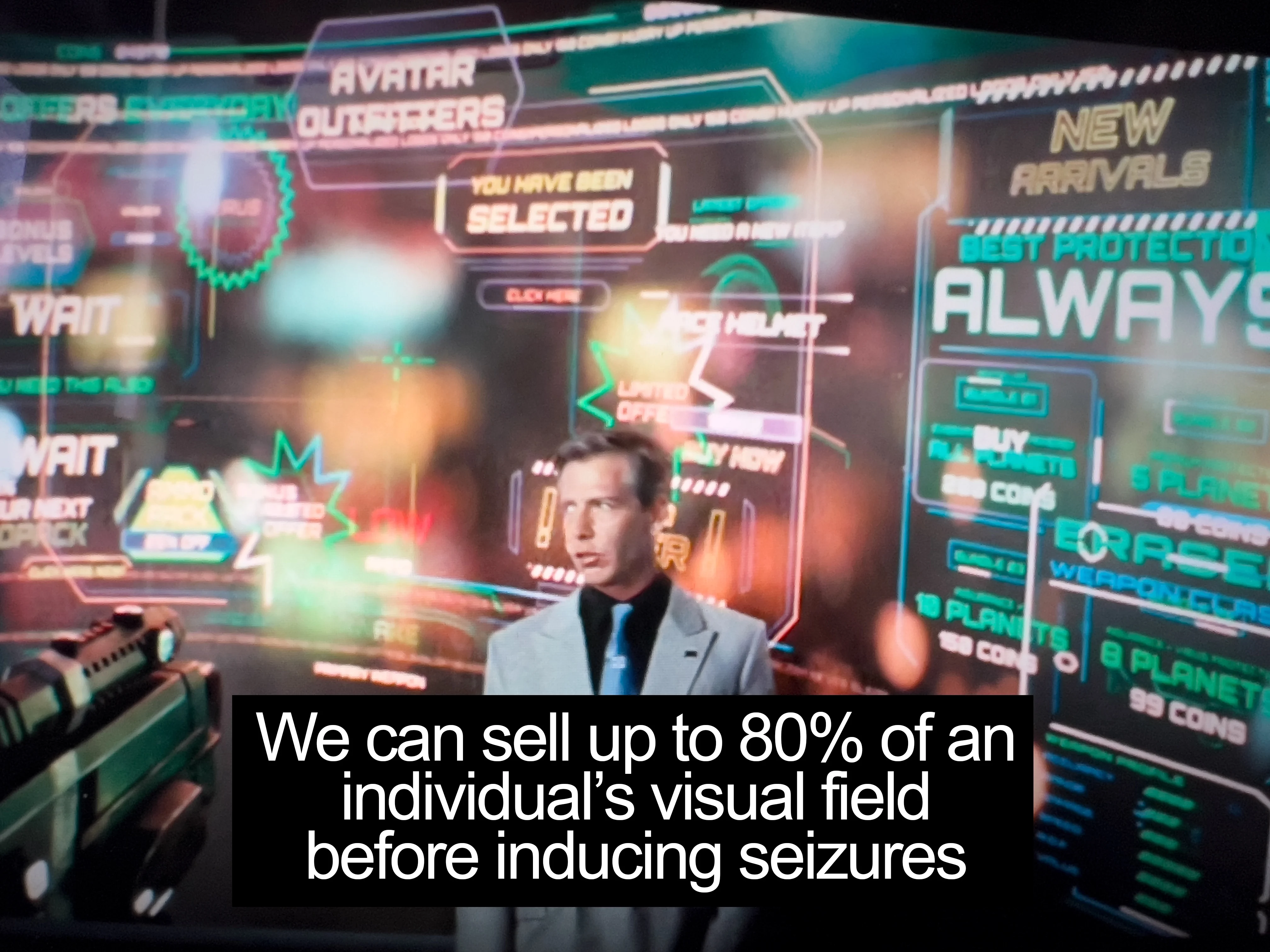

Do they include “fighting with anti patterns and dark patterns” as broken? It’s pretty insane how much misalignment there is between what most people want their computers to do and what the companies want people to do, which seems to largely be “look at ads literally everywhere”.

Personal computing is badly sick today.

Even for Linux users.

Why for Linux? It’s always painted as Zion for Matrix-dwellers?

It’s painted like that because it is. It’s the biggest bastion of freedom.

Well, because it’s still enormously complex and growing, and because, in user applications, comparing today’s XFCE to 2010’s XFCE is sad, and because comparing today’s Gnome to Gnome 2 in its prime is sad, and because comparing today’s KDE, eh, even to KDE4 - the same.

Because it’s becoming less and less logical: wave after wave of people suffering from NIH syndrome and/or thinking that mimicking MacOS or Windows is very smart erode it. And because the Web is ugly and becoming uglier.

And because CWM initial configuration takes 15 minutes to write and forget, and there’s no Wayland compositor which would take the same amount of time to set up for me, with the same easiness of use.

Anyway, what I wrote in that comment was a subjective feeling and I’m trying to rationalize it retroactively now, which is the same as lying.

Of course it’s what you said for Windows and MacOS users.

Most of my time is lost on cloud services that got shittier over time.

My personal computer just works on Linux.

How much time do we waste on car problems? Neighbor problems? Political problems? Grocery problems?

How much time do we waste on first-past-the-post problems?

Right and how much time do we save by having computers? Fixing the problems is just the cost of doing business

Yeah, this seems like a pretty dumb conclusion. I expect that as far back as you look, people always took advantage of tools that save them time. But then they always also spent a fair amount of that time (that they could have been working), just maintaining/fixing/making their tools. I think the truth is that computers are very useful tools, but the maintenance and troubleshooting can be quite time consuming.

I will continue using computers though.

How about everyone who has zero skills with these problems? Do they count as 0% spent on them because they outsource it, or do they count as 100% since the smallest problem incapacitates their computer usage?

Using the word “we” loosely.

It’s actually simple.

HIG, UX, ergonomics, all that - it doesn’t build up. Acceptable complexity of a pretty mechanical normal 80s’ UI\UX is the same as of a modern one. Humans don’t evolve over decades, they evolve over spans of time which are as good as eternity. They still need the same kind of complexity in tools they use.

A control panel for a loader that a factory worker should be able to use is as complex as a workflow on a computer can be. And that’s very explicitly accounting for the fact that loader’s or lift’s control panel doesn’t change every fucking day and the user remembers it, so computer UIs should be simpler than those of lifts and loaders!

You just don’t make UI\UX more complex than that. There are things humans can learn to do, and there are things they often can’t and they shouldn’t.

The issue is that this creates a bottleneck for clueless project managers, UI designers and such. They can’t throw together some shit in 30 minutes. They have to choose. They have to test. They don’t want that. And no regulation makes them do that, because if a loader has an unclear UI\UX, you might kill someone, while if an email program has that, you’ll just get very nervous.

> A control panel for a loader that a factory worker should be able to use is as complex as a workflow on a computer can be. And that’s very explicitly accounting for the fact that loader’s or lift’s control panel doesn’t change every fucking day and the user remembers it, so computer UIs should be simpler than those of lifts and loaders!

I design control panels. I try to keep the workflow consistent not because I see value in it, but because some asshole decided that they didn’t want to pay for retraining. Really I don’t care, having to retool slightly every decade or so is pretty reasonable. Especially given that the tech is always changing.

> Especially given that the tech is always changing.

Humans don’t. Changing things is fine, making using them more complex for the same result, because another decade has passed, is not.

It has to get more complicated: more edge cases have popped up and the process is more complicated.

Look, a basic example. I made an uncoiler and needed to add in a reverse override. Why? Because someone one time loaded it in wrong.

By “more complex” I meant making other operations slower (EDIT: and harder to understand) for somebody using it, so - not this example.

It’s not a waste if I’m getting paid to do it full time

That’s why I only use mentats.

Linux users bring the numbers up

Once everything is set up properly it just works tbh. Meanwhile, on Windows, updates broke something every other time.

Really? Because I updated and my wine prefix just broke. That was yesterday.

Skill issue. I don’t update the wine binaries I use for my most used prefix. I use https://github.com/Kron4ek/Wine-Builds/releases. I may set up a new one eventually and just migrate the data tho. Maybe once a year, so once per major release of wine.

I see, I was holding it wrong

This is so not true unless you are using some super stable old Debian release and aren’t doing complex work.

Most DEs are super buggy, especially the darling child kde, which right off the bat makes things not super stable.

Additionally some of the most loved distros are rolling release and inherently unstable.

Hell, I use multiple distros daily, fedora and slackware, I also use windows for work, windows is by and large more stable in my experience.

Slackware has kernel panics monthly, kde crashes on fedora, Wayland has too many problems to count, meaning I have to switch to x sessions all the time.

Most GUI software I use has tons of visual glitches.

Yes it’s tolerable, that’s why I still use it, but I wouldn’t exactly say it ‘just works’

I would estimate I restart my fedora computer about 4-5 times more often than the windows computer, and usually I have to restart fedora because of serious hard crashes (e.g. kde crashes so hard that I can’t even switch to a tty, meaning I need to hard reset)

I’ve not had anything like that since… forever. But then I’m not a kde nor fedora user. Naturally raises the question - have you considered switching from kde, fedora or both?

If Linux “just worked” I would have switched years ago. I’ve used several distributions, always preferred Gnome to KDE, and even with “expert” help setting things up, I always spent way more time trying to make things work than actually having things work. Unless it’s a basic-ass workstation being used for minimal computer things or to run a server for something, there’s always something that doesn’t want to work.

I like the idea of Linux more than I actually like using Linux. :/

Fair enough. What stuff do you run on your regular week?

I use KDE on my Linux machine, which means that I cannot develop anything involving the GPU.

The moment I experiment a little with the API or give it wrong parameters, not only my program crashes, but the whole system freezes and I have to manually press the “power off” button.

It does happen in windows too, however it’s 100x less likely.

I also had a problem not long ago that crashing my program would not free the RAM, so every time I ran the program (and it crashed), I had 2-3GiB less of RAM. So I had to restart the computer every 10 runs or so.

Operating systems are supposed to isolate programs and manage their resources. A program crashing under no circumstances should affect any other program. I don’t understand how it can happen.

I can’t tell if you are joking. But just in case, my installation worked flawlessly for years.

I mean, that’s fine, but as a Linux user I’ve fucked around a lot and spent a lot of time fixing mistakes that I did not need to make.

I think I’m a pretty average Linux user. Who needs something that “just works” when you can break it by trying to add something you don’t need?

Yaa arch BTW guys!!

We are wasting up to 20% of our time with bronze problems.

– Some grumpy dude circa 3300 BC

Must be the crappy copper from Ea-nāṣir

This. We used to waste time repairing the mechanical things when we could have been planting, or wasting time dealing with plant blights and livestock woes when we could have been hunting for wild game.

Some people still do. Fuck John Deere.

Hack John Deere. And hoist the Jolly Roger when you get it working again!